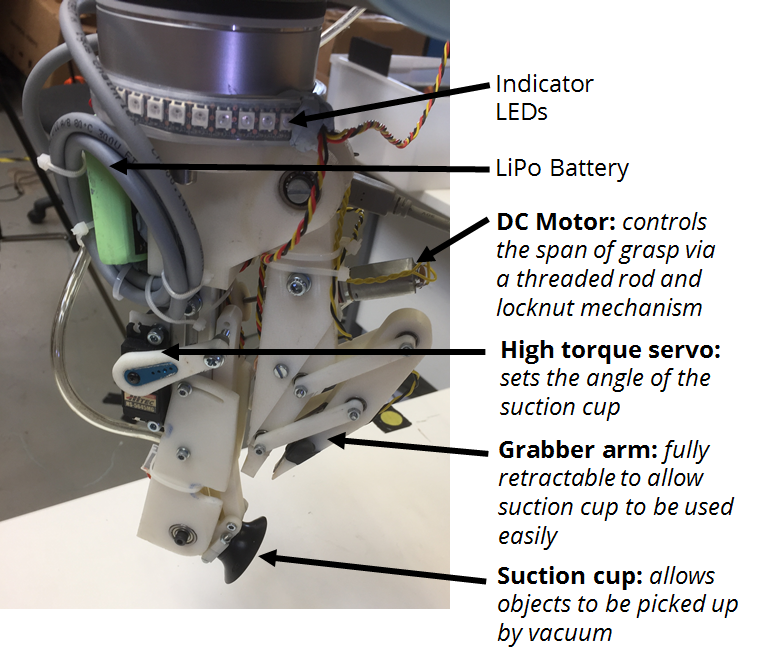

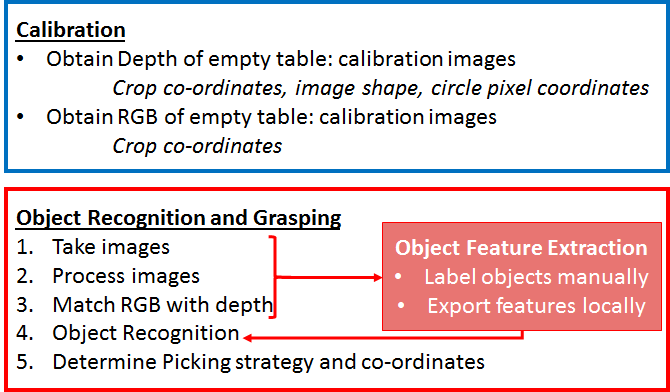

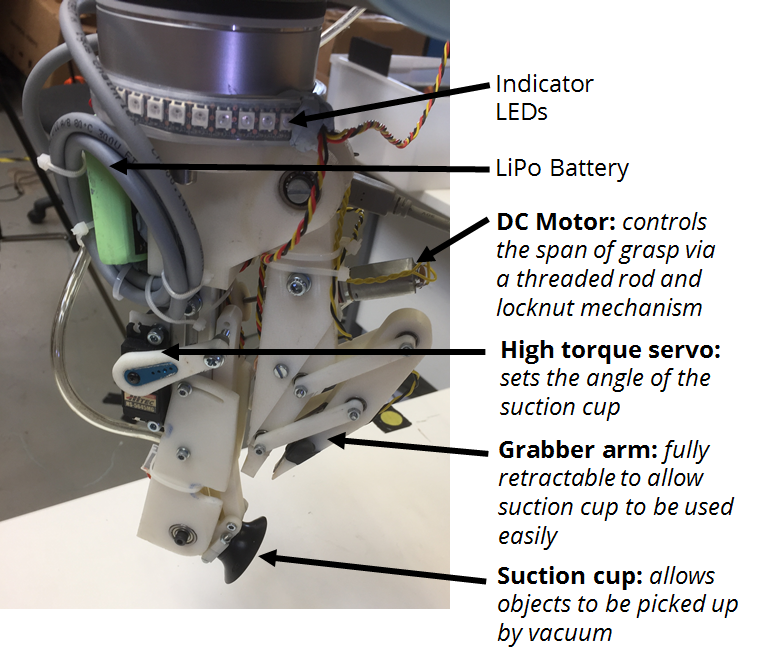

The end effector has been designed to operate in two main modes, suction and grasping, so that a wide range of objects can be manipulated. The gripper has three sources of actuation, allowing:

- Variation of the grasping diameter

- Variation of the suction head angle

- Actuation of the grasping arm
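The three actuated degrees of freedom, together with the suction mode, can be captured in a single command structure. The sketch below is a minimal illustration only: the class, its field names and the travel limits (`GRASP_DIAMETER_RANGE_MM`, `SUCTION_ANGLE_RANGE_DEG`) are hypothetical, since the real limits depend on the hardware and are not given here.

```python
from dataclasses import dataclass, replace

# Hypothetical actuator limits; the real values depend on the hardware.
GRASP_DIAMETER_RANGE_MM = (20.0, 120.0)
SUCTION_ANGLE_RANGE_DEG = (0.0, 90.0)


def _clamp(value: float, bounds: tuple[float, float]) -> float:
    """Limit value to the closed interval given by bounds."""
    low, high = bounds
    return max(low, min(high, value))


@dataclass(frozen=True)
class EffectorCommand:
    """One set-point for the end effector (hypothetical representation)."""
    grasp_diameter_mm: float  # actuator 1: grasping diameter
    suction_angle_deg: float  # actuator 2: angle of the suction head
    arm_closed: bool          # actuator 3: grasping arm open/closed
    suction_on: bool          # suction mode on/off (vacuum valve)

    def clamped(self) -> "EffectorCommand":
        """Return a copy with the continuous set-points limited to the actuator ranges."""
        return replace(
            self,
            grasp_diameter_mm=_clamp(self.grasp_diameter_mm, GRASP_DIAMETER_RANGE_MM),
            suction_angle_deg=_clamp(self.suction_angle_deg, SUCTION_ANGLE_RANGE_DEG),
        )
```

Clamping on the PC side, before anything is sent to the hardware, is one simple way to keep out-of-range requests from reaching the motors.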

A labelled picture of the manipulator is shown below:

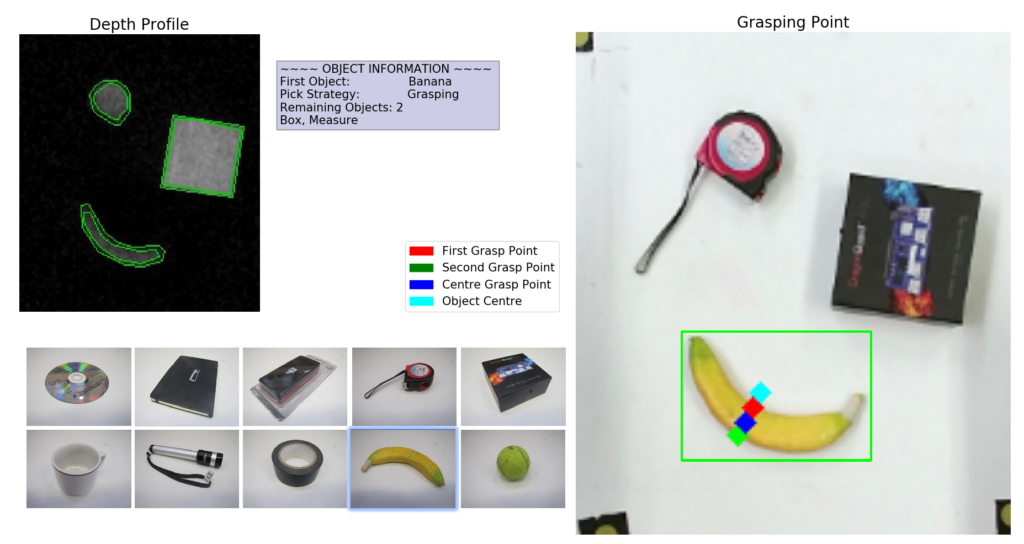

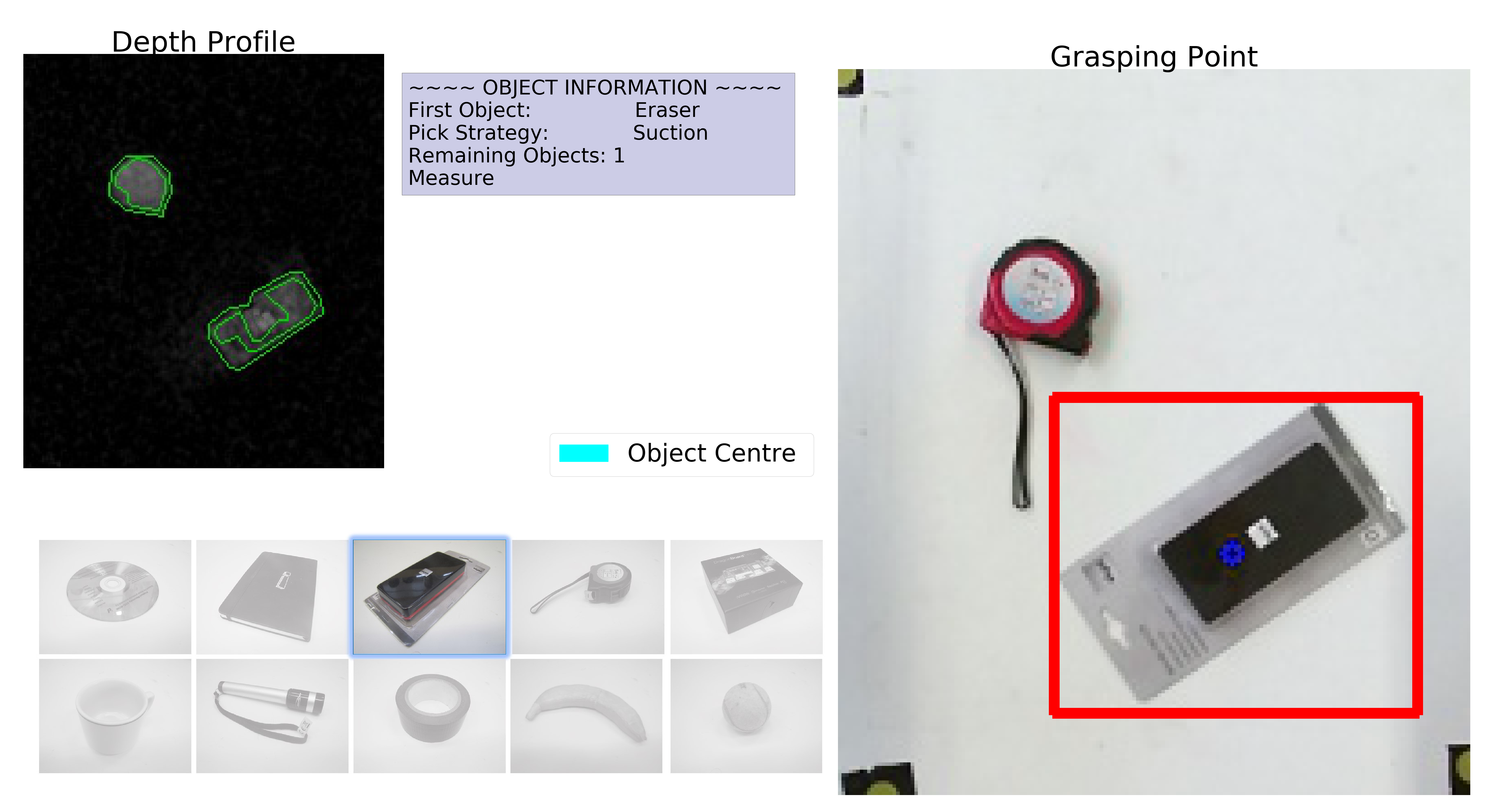

The grasping strategies developed for specific objects are demonstrated below.

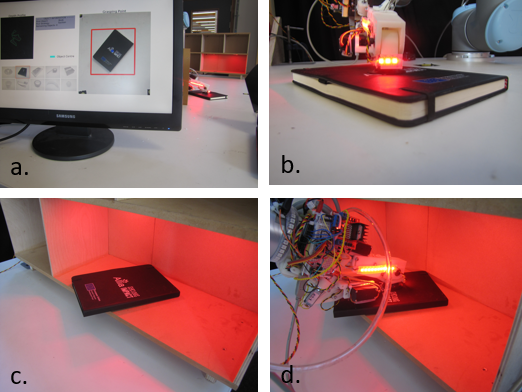

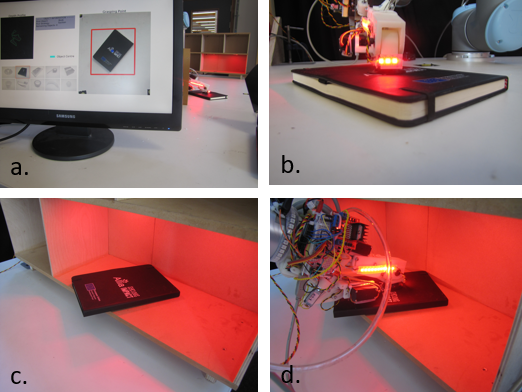

Picking and stowing a book

a. The suction cup is lowered onto the middle of the object, b. until contact between the object and the suction cup is detected, c. the object is placed on the shelf, d. to pick it from the shelf, suction is applied to the centre of the object.
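Steps a and b amount to a guarded move: the effector descends in small increments and stops as soon as contact is detected, or gives up after a maximum travel. How contact is sensed is not stated here; the sketch below assumes two hypothetical hardware hooks, `move_to(depth_mm)` and a boolean `in_contact()` (in practice perhaps a vacuum pressure switch or a limit switch), and simulates them for the example.

```python
from typing import Callable, Optional


def lower_until_contact(
    move_to: Callable[[float], None],
    in_contact: Callable[[], bool],
    step_mm: float = 2.0,
    max_travel_mm: float = 200.0,
) -> Optional[float]:
    """Lower the suction cup in fixed steps until contact is reported.

    Returns the depth (mm below the start point) at which contact was
    detected, or None if max_travel_mm was reached without contact.
    `move_to` and `in_contact` are hypothetical hardware hooks.
    """
    if step_mm <= 0:
        raise ValueError("step_mm must be positive")
    depth = 0.0
    while depth < max_travel_mm:
        depth = min(depth + step_mm, max_travel_mm)
        move_to(depth)    # command the next small descent
        if in_contact():  # e.g. vacuum pressure drop or limit switch
            return depth
    return None


# Simulated object whose top surface is 37 mm below the start position.
position = {"depth": 0.0}
contact_depth = lower_until_contact(
    move_to=lambda d: position.update(depth=d),
    in_contact=lambda: position["depth"] >= 37.0,
)
print(contact_depth)  # 38.0: the first 2 mm step at or past 37 mm
```

The step size trades speed against how far the cup overshoots the surface before contact is registered; returning `None` lets the caller treat a missed object as a failed pick rather than pressing on.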

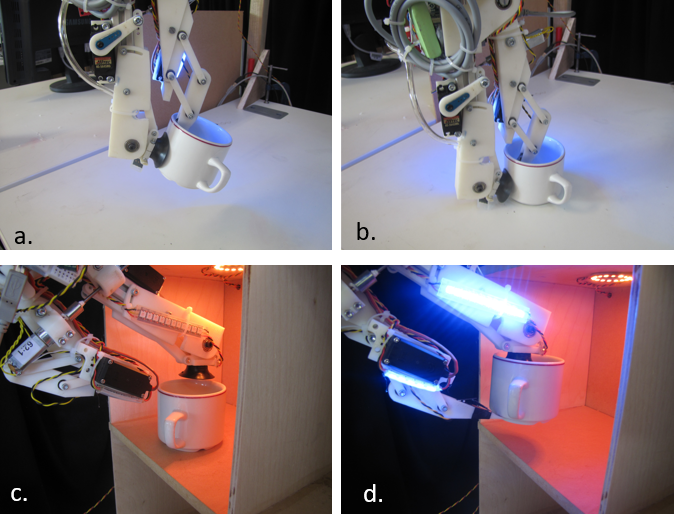

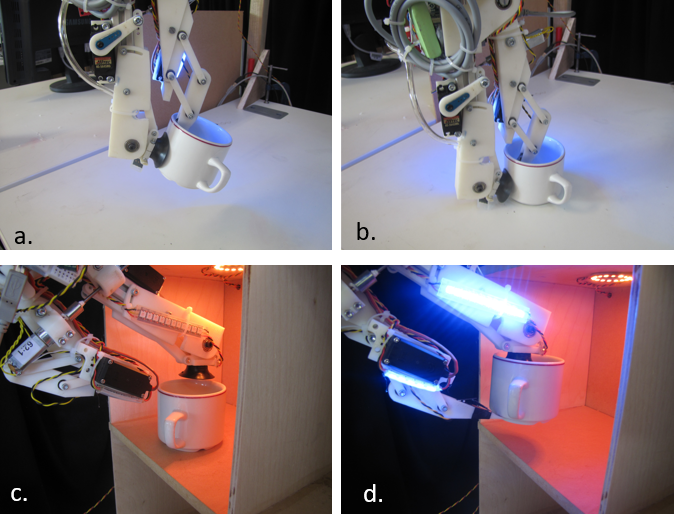

Picking and stowing a mug

a. The mug is grasped between the suction cup and the grasping arm, b. lifted, c. placed on the shelf, d. then dragged to the front of the shelf, and the manipulator arm is moved underneath it to cradle the mug.
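Each of these strategies is an ordered sequence of primitives, and a failure at any step (for example, losing the mug while lifting) should stop the sequence rather than let it continue blindly. A minimal sketch of such a runner follows, assuming each step is a hypothetical callable that returns `True` on success; the step names simply mirror panels a to d and are not functions from the project.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], bool]]


def run_sequence(steps: List[Step]) -> Tuple[bool, List[str]]:
    """Run steps in order, stopping at the first one that reports failure.

    Returns (success, names of the steps that completed successfully).
    """
    completed: List[str] = []
    for name, action in steps:
        if not action():
            return False, completed
        completed.append(name)
    return True, completed


# Mug strategy, panels a to d; the lambdas stand in for real motion primitives.
mug_steps: List[Step] = [
    ("grasp between suction cup and arm", lambda: True),
    ("lift", lambda: True),
    ("place on shelf", lambda: True),
    ("drag to shelf front and cradle", lambda: True),
]
ok, done = run_sequence(mug_steps)
print(ok, len(done))  # True 4
```

Returning the list of completed steps makes it easy to decide how to recover, for instance releasing suction if the failure happened after the grasp.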

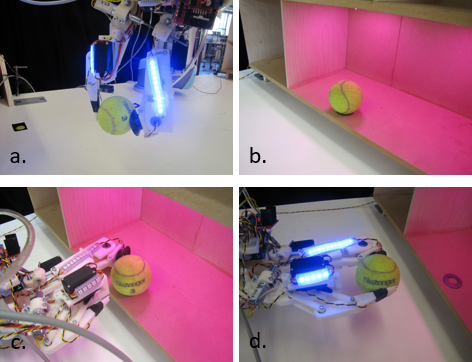

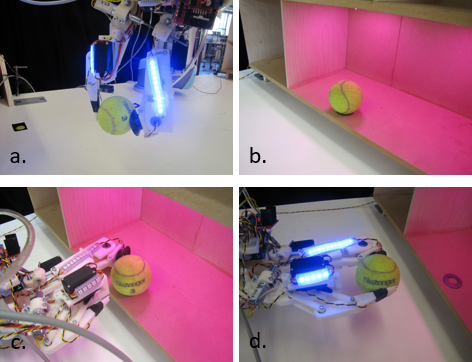

Picking and stowing a ball

a. The ball is grasped and lifted, b. placed on the shelf without rolling, c. the grabber is aligned with the ball, d. and the ball is lifted.
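Step c requires the grabber to be centred on the ball before it is lifted. How the ball position is measured is not described; assuming an estimate of the ball centre in the shelf plane is available (for example from a camera), the correction reduces to a vector difference plus a tolerance check. The function name and the 3 mm tolerance are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in mm, in the shelf plane


def alignment_correction(
    grabber: Point, ball: Point, tolerance_mm: float = 3.0
) -> Tuple[Point, bool]:
    """Return the (dx, dy) move that centres the grabber over the ball,
    and whether the grabber is already within tolerance_mm of that centre."""
    dx = ball[0] - grabber[0]
    dy = ball[1] - grabber[1]
    aligned = math.hypot(dx, dy) <= tolerance_mm  # straight-line offset
    return (dx, dy), aligned


correction, aligned = alignment_correction((100.0, 50.0), (103.0, 54.0))
print(correction, aligned)  # (3.0, 4.0) False: a 5 mm offset exceeds 3 mm
```

In a real system this check would typically be repeated after each correction, since the ball may shift slightly while the grabber moves.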

The manipulator is controlled by an Arduino, which drives the valves and motors and communicates with the main PC over a serial link. A block diagram of the system is shown below.

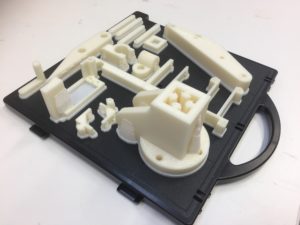
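The serial message format between the PC and the Arduino is not specified. One simple option, shown here purely as a hypothetical sketch, is a newline-terminated ASCII line of letter-prefixed fields that the PC builds and the Arduino parses; on the PC the bytes could then be sent with pyserial's `serial.Serial(...).write()`. The field letters and their meanings are assumptions, and the decoder is written in Python only to illustrate the parsing the Arduino would perform.

```python
from typing import Dict

# Hypothetical field letters: D = grasp diameter (mm), A = suction head angle (deg),
# G = grasping arm (0 open, 1 closed), V = vacuum valve (0 off, 1 on).
FIELDS = ("D", "A", "G", "V")


def encode_command(values: Dict[str, int]) -> bytes:
    """Build one newline-terminated ASCII command, e.g. b'D60 A45 G1 V0\\n'."""
    missing = [f for f in FIELDS if f not in values]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    line = " ".join(f"{f}{int(values[f])}" for f in FIELDS)
    return (line + "\n").encode("ascii")


def decode_command(message: bytes) -> Dict[str, int]:
    """Parse a command line back into field values (mirrors the Arduino-side parsing)."""
    text = message.decode("ascii").strip()
    values: Dict[str, int] = {}
    for token in text.split():
        key, number = token[0], token[1:]
        if key not in FIELDS:
            raise ValueError(f"unknown field {key!r}")
        values[key] = int(number)
    return values
```

A line-based text format is easy to inspect in a serial monitor during development; a binary format with a checksum would be more compact and more robust to corrupted bytes.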

Much of the system was produced using rapid prototyping methods, which allowed fast testing and iteration. The CAD files are provided in the resources section.

This approach allowed us to develop and test a number of different manipulator designs quickly.